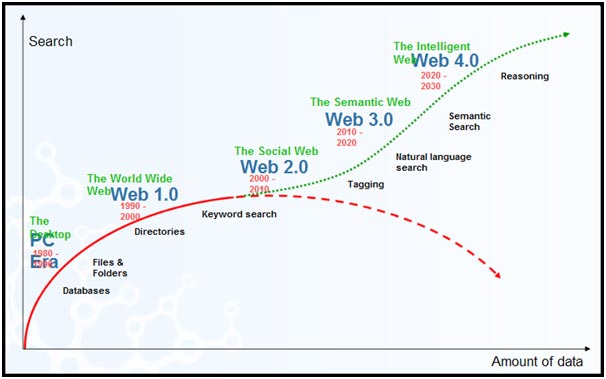

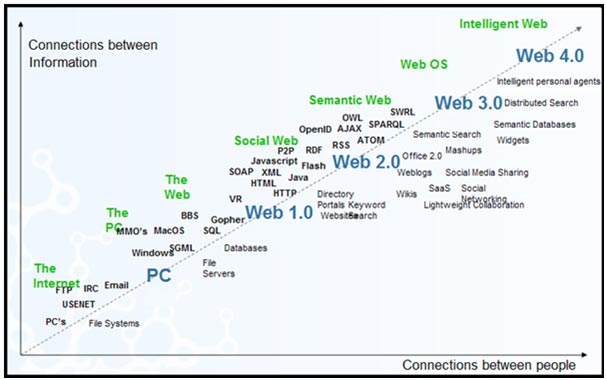

Even with mankind firmly in the 21st century, and more than four decades after HAL from 2001: A Space Odyssey showed humans the way to go, it seems unfathomable that conversing with machine-held information in natural language is still not an everyday reality.

Wouldn’t it be amazing if we could get computers, for instance, to read Shakespeare’s plays and perhaps rewrite them using different words – and not just by applying synonym replacements or related concepts? Imagine a machine even giving us a completely “new” play in Shakespeare’s writing style. Just think of the impact that reaching this technological level would have on a rapidly exploding data world that hungers for better semantic filtering capabilities.

Why are we not there yet?

What hasn’t been done, and what represents the “holy grail” of semantic analysis on a technical level, is an efficient multi-word to multi-word transformation of grammar and semantics between text input and text output.

The semantic technologies currently available (those dealing with meaning in language data) are crippled by a serious flaw. It is difficult to generate a semantically and grammatically equivalent body of text from an existing repository of language patterns and word combinations. Additionally, sentence structure and the logical flow of meaning have to fit in with the physical and rational make-up of the world we live in.

The flaw comes in when we literally have to “show computers our world”. By attempting to “categorise” words or concepts beyond the English left-right, Arabic right-left, or Chinese up-down reading and writing order, most modern semantic intelligence technologies produce a level of complexity that is unsustainable in terms of permutations.

By laying down logical concept rules, such as “a dog is alive” and “things that are alive replicate”, giving us “a dog replicates”, current technologies hope to create systems that generate and perpetuate rules of logic – and eventually represent some type of “machine intelligence” on a level with human thinking.
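As a rough illustration of the rule-chaining idea described above, here is a minimal sketch of forward chaining over (entity, property) facts. The data layout and the function name `forward_chain` are assumptions made for this example and do not come from any of the systems discussed in this article.

```python
# Toy rule base (hypothetical layout, for illustration only).
# A fact is an (entity, property) pair: "a dog is alive".
facts = {("dog", "alive")}

# A rule is a (premise, conclusion) pair: "things that are alive replicate".
rules = [("alive", "replicates")]


def forward_chain(facts, rules):
    """Apply every rule repeatedly until no new fact can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for entity, prop in list(derived):
            for premise, conclusion in rules:
                new_fact = (entity, conclusion)
                if prop == premise and new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived


if __name__ == "__main__":
    for entity, prop in sorted(forward_chain(facts, rules)):
        print(entity, prop)
```

Every new concept needs its own facts and rules before anything can be derived about it, which is where the hand-built approach starts to strain.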

Categorisation systems very quickly run into the “permutation problem”. Imagine any sentence of about 8-10 words, e.g. “I really appreciate my mother in the morning”. What would happen if we replaced each word with, let’s say, 10 equivalent words that fit both grammatically and semantically, e.g. “I definitely/positively/demonstratively…” “like/admire/love my mother…”? Taking the original phrase and inserting the replacement words in all possible groupings that still make sense, the 8 word positions with 10 choices each give 10^8, or 100 million, phrases that are ALL grammatically and semantically equivalent – and we are still only saying that we feel positive about our mother some time early in the day!
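The 100 million figure can be checked with a few lines of code. The sketch below assumes exactly 10 interchangeable choices at each of the 8 word positions. The substitute lists in `slots` are hypothetical and deliberately tiny, so that the variants can actually be enumerated.

```python
from itertools import product
from math import prod

sentence = "I really appreciate my mother in the morning".split()


def count_variants(num_words, choices_per_word):
    """Number of phrases when every word position has the same number of choices."""
    return choices_per_word ** num_words


# Hypothetical substitute lists: only two positions vary here, to keep the
# enumeration small. The words are examples, not a real thesaurus.
slots = [
    ["I"],
    ["really", "definitely", "positively"],
    ["appreciate", "like", "admire", "love"],
    ["my"],
    ["mother"],
    ["in"],
    ["the"],
    ["morning"],
]


def variants(slots):
    """Yield every phrase formed by picking one word per position."""
    for combo in product(*slots):
        yield " ".join(combo)


if __name__ == "__main__":
    print(len(sentence), "words")
    print(count_variants(len(sentence), 10), "variants with 10 choices per word")
    print(prod(len(s) for s in slots), "variants in the small example:")
    for phrase in variants(slots):
        print(" ", phrase)
```

The count is the product of the number of choices at each position, so it grows exponentially with sentence length: each extra word multiplies the total by another factor of 10.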

Even the smallest body of text of minimal complexity obviously has trillions upon trillions upon trillions of grammatical and semantic equivalents. Using these logical categorisation systems, we simply do not have the power to multiply concept combinations fast enough to cover the permutation problem. Worldwide efforts since the 1980s around ontological classifications, hierarchical categorisation, entity collections and logic rule-based systems have therefore not succeeded quite as envisaged. We can think of Cyc, OpenCyc, Mindpixel and WordNet, amongst many.

“Permutations” is the villain that everyone hopes will disappear with “just a few more categorisations…”

Alas, it will not.

What is needed is a small, compact “semantic engine” that can “see” our world and that can generate the trillions of concept permutations needed to adequately represent the resulting image.

With an abundance of data in a complex and highly unstructured web, and without a powerful enough “engine”, we really don’t have much chance of ordering and classifying this data such that all the concepts inside it relate to everything else in a manner that holistically resembles our real human world.

The search is therefore on for a technology that could take a quantum leap into the future. If we can start by enabling machines to “rewrite Shakespeare”, we should be able to develop an innovative, ontology-free, massively scalable algorithmic technology that requires no human intervention and that could act as librarian between humans and data.

The day when humans are able to easily talk to, reason with and “casually converse” with unstructured data will mark a giant leap in human-machine symbiosis and – after far too long a wait – we can perhaps still experience a true Turing “awakening” in our lifetime.

To see a version of Shakespeare’s Hamlet re-written by a machine, have a look at…

http://gatfol.com/gatfolpower/strangerthanfiction.html
