Gatfol is a South African-developed, provisionally US-patented search technology with built-in human language intelligence

Centurion, South Africa (PRWEB) April 26, 2013

Gatfol provides base technology that enables digital devices to process human natural language efficiently.

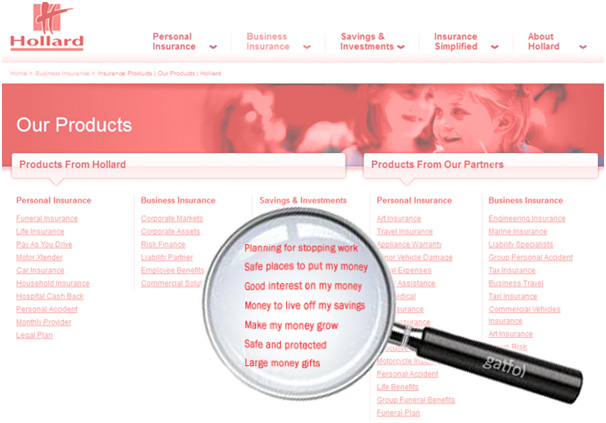

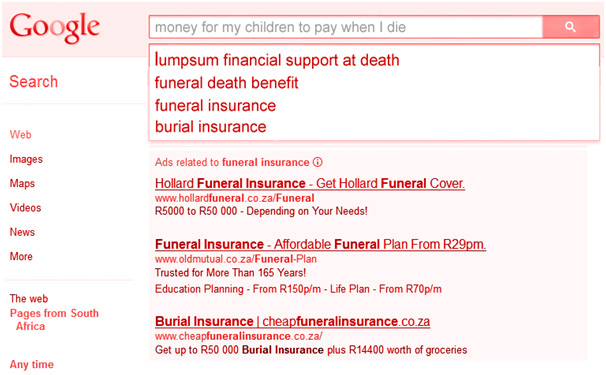

The goal of truly semantic search has not yet been fully realized. The main obstacle is the enormous number of ambiguous, semantically equivalent word permutations in even the simplest phrases, which until now has required huge structured lexicons and ontologies as processing guides.

Gatfol is developing its patented technology commercially to massively improve all keyword-based search in the millions of in-house and public online databases worldwide. A first fully integrated prototype has now been installed on a clustered network of 27 desktop computers in Centurion, South Africa.

Founder and CEO Carl Greyling firmly believes that Gatfol technology is crucially needed by many digital processors worldwide. Without a Gatfol-type solution, further development in many large digital industries is difficult. These include online retail (Amazon, Staples, Apple, Walmart), online classified advertising (Craigslist, Junk Mail), online targeted advertising (Google, Facebook, Twitter, Yahoo), augmented reality (Google Glass), national security in-stream data scanning (FBI, CIA, most governments worldwide), abusive-language filtering, especially in child-friendly online environments (Habbo Hotel, Woozworld), image and video auto-tagging for security monitoring (most police forces worldwide), human-to-machine natural language interfaces (all web search engines, such as Google, Yahoo!, Bing and Ask) and web text simplification for disadvantaged web users.

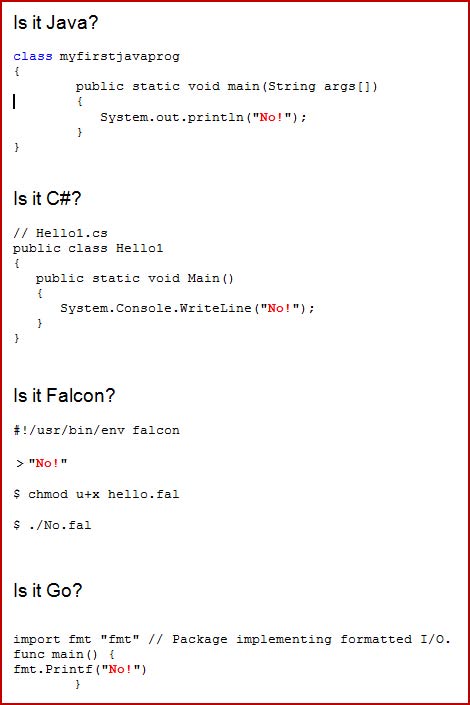

The Gatfol operational technology comprises multiple redundant, local machine-based master and slave software nodes that process input in parallel, ensuring extremely high throughput at very large input volumes regardless of machine and CPU hardware configuration. This applies to the full production cycle, from web RSS-based sourcing to microsecond delivery of output to calling applications. Processing speed increases in proportion to the number of nodes applied.
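The master/slave arrangement described above can be illustrated with a minimal Python sketch. It is not Gatfol's implementation: the `process_chunk` function is a hypothetical stand-in for a slave node's work (here it only lowercases and tokenizes text), and a thread pool stands in for Gatfol's separate executable nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def process_chunk(chunk: str) -> list[str]:
    # Hypothetical stand-in for a slave node's work: normalize and tokenize
    # one chunk of text. Gatfol's actual semantic processing is not public.
    return chunk.lower().split()


def master(chunks: list[str], n_nodes: int) -> list[list[str]]:
    # The "master" hands each input chunk to a pool of n_nodes workers and
    # collects the results in the original input order (map preserves order).
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        return list(pool.map(process_chunk, chunks))


if __name__ == "__main__":
    feed = ["Cheap RED shoes", "Red shoes cheap", "Inexpensive crimson footwear"]
    for tokens in master(feed, n_nodes=3):
        print(tokens)
```

Swapping `ThreadPoolExecutor` for `ProcessPoolExecutor` keeps the same `map` interface but runs each worker in a separate process, which is closer to the independent executable nodes described here and avoids CPython's global interpreter lock for CPU-bound work.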

Unlike almost all competing technology available today, Gatfol provides robust parallel processing power even on simple desktops or laptops. Because all data streams stay local to the processing machine, Gatfol technology can be applied in security-sensitive environments (e.g. battlefield deployments) without exposure through networking, online processing or data transfer.

Gatfol uses a simulated Hadoop-style architecture of multiple master and slave nodes built around simple but robust Windows™ executable files, with multiple fallback redundancies for both master and slave functions and with every node carrying a full word-relationship database. This ensures reliable throughput, which is especially critical for high-volume streaming.
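The fallback redundancy described above can be sketched as follows. This is an illustrative assumption, not Gatfol's code: `run_with_fallback`, `crashed_node` and `healthy_node` are hypothetical names, and each "node" is modeled as a plain function that either returns a result or raises an exception.

```python
from typing import Callable, Sequence


class AllNodesFailed(Exception):
    """Raised when the primary node and every fallback node fail."""


def run_with_fallback(nodes: Sequence[Callable[[str], str]], item: str) -> str:
    # Try the primary node first, then each fallback in turn. Because every
    # node is assumed to hold the full word-relationship database, any single
    # node can process the item on its own.
    errors = []
    for node in nodes:
        try:
            return node(item)
        except Exception as exc:  # node failed: record the error, try the next
            errors.append(exc)
    raise AllNodesFailed(f"{len(errors)} node(s) failed for {item!r}")


def crashed_node(item: str) -> str:
    # Hypothetical node that is offline.
    raise RuntimeError("node offline")


def healthy_node(item: str) -> str:
    # Hypothetical working node; uppercasing stands in for real processing.
    return item.upper()


if __name__ == "__main__":
    print(run_with_fallback([crashed_node, healthy_node], "red shoes"))
```

Keeping a full copy of the data on every node is what makes this simple retry loop sufficient: no node depends on another being reachable.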

Because it is based on ordinary executable files, Gatfol also preserves functionality on legacy hardware and operating systems (Windows XP and older) and is easily portable when the local machine's OS is upgraded.

The standalone Gatfol footprint can easily be incorporated into wider distributed processing architectures, including full Hadoop and cloud-based environments, with corresponding scalability in throughput performance.

RSS sourcing is highly scalable: throughput has already been successfully tested at nine terabytes of web text per month.
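For comparison with the hourly figures below, the monthly figure can be converted to an hourly rate. This assumes a 30-day month and decimal units (1 TB = 1000 GB):

```latex
\frac{9\ \text{TB}}{30 \times 24\ \text{h}} = \frac{9000\ \text{GB}}{720\ \text{h}} = 12.5\ \text{GB/h}
```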

The current best input-output text throughput of a Gatfol semantic crystallization stack 50-100 levels deep on a standalone desktop (Intel Dual 2.93 GHz, 3.21 GB RAM, Windows XP) is 3.6 MB/hour for a single Gatfol cluster instance, 11.78 MB/hour for a 10-cluster instance and 98 MB/hour for a 100-cluster instance.

On a standalone desktop (Intel Quad 3.30 GHz, 2.91 GB RAM, Windows XP), the best text throughput for a 50-100-level stack on a Gatfol 1000-cluster instance is 611 MB/hour.

Total text throughput for a 50-100-level stack on a group of 20 ordinary desktops linked via Microsoft Networks (Intel single-core 2.8 GHz, 768 MB RAM, Windows XP), each running a Gatfol 100-cluster instance, is 1.9 GB/hour, giving a maximum text output volume of 140 GB/hour.
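Dividing the grouped throughput by the number of machines gives a rough per-desktop rate, assuming decimal units (1 GB = 1000 MB) and an even load across the 20 desktops:

```latex
\frac{1.9\ \text{GB/h}}{20\ \text{desktops}} = \frac{1900\ \text{MB/h}}{20} = 95\ \text{MB/h per desktop}
```

This is close to the 98 MB/hour reported for a single desktop running a 100-cluster instance, although that figure was measured on different hardware, so the comparison is only a rough consistency check.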

About Gatfol

Gatfol is the culmination of 12 years of work originating in the UK. Virtual auditing agents were developed using an intelligent natural language accounting system with neurophysiological programmatic bases to penetrate, roam in and report on patterns in financial data. This work led to an EMDA Innovation in Software award from the European Union in 2006 and formed the basis of the Gatfol algorithms and technology currently in development.

Gatfol’s immediate aims are to improve search using semantic intelligence (meaning in data), both on the Web and in proprietary databases. Gatfol technology was provisionally patented in the USA in April 2011 and has PCT protection in 144 countries worldwide.

It is Gatfol’s vision to eventually enable humans to talk to data on all relevant interface devices.

Those interested in learning more about Gatfol technology can visit the Gatfol Blog. For more information, contact Carl Greyling at Gatfol on +27 82 590 2993.