I remember reading online about a recent interview (one of many) with Ray Kurzweil – Director of Engineering at Google and the world’s staunchest AI visionary – in which he described his plans along the lines of…

“to get the Google computers to understand natural language, not just do search and answer questions based on links and words, but actually understand the semantic content”

I cannot remember where I saw the interview and will Google it…

…but I have a problem…

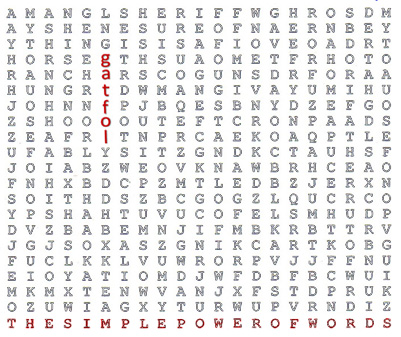

I know my keywords are fuzzy, so I use Gatfol to show me some sample permutations of my input

…and realise that with 13 keywords and only 10 same-meaning single-word replacements for each keyword, as well as allowing for multiword to semantically equivalent multiword replacements, I am looking at a minimum of 10 000 000 000 000 000 000 000 000 different ways to phrase my input

…all with the same basic meaning…
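To get a feel for how quickly these numbers grow, here is a minimal sketch that counts only the single-word part of the problem. It assumes every keyword can be swapped independently of the others; the function name `count_phrasings` and the `keep_original` flag are illustrative only and have nothing to do with how Gatfol works internally. The multiword-to-multiword replacements mentioned above are not quantified in this post, so they are left out here, and they are what pushes the total far beyond these figures.

```python
def count_phrasings(num_keywords: int,
                    replacements_per_keyword: int,
                    keep_original: bool = True) -> int:
    """Count phrasings when each keyword is swapped independently.

    Assumption: choices for different keywords do not interact, so the
    total is simply (options per keyword) ** (number of keywords).
    """
    # Optionally count the original word as one more choice per slot.
    options = replacements_per_keyword + (1 if keep_original else 0)
    return options ** num_keywords


# 13 keywords, 10 same-meaning single-word replacements each.
single_word_only = count_phrasings(13, 10, keep_original=False)
with_original = count_phrasings(13, 10, keep_original=True)

print(f"Replacements only:        {single_word_only:,}")  # 10 ** 13
print(f"Original word also kept:  {with_original:,}")     # 11 ** 13
```

Even this restricted count is already in the tens of trillions for a single 13-keyword query.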

I also realise that I want a very specific search result, one that semantically matches my input, and not just pages that happen to hit any of the trillions of different keyword combinations…

I quickly calculate that just by browsing my own thoughts and looking at my immediate physical surroundings, I can theoretically come up with trillions upon trillions of meaningful search input queries… each with trillions upon trillions of semantically equivalent phrasings…

The thought hits me that if Google wants to morph into Ray Kurzweil’s vision of a search mind that I can communicate with and interrogate as if it were human – perhaps in five to ten years – it will have to perform massively scalable multiword-to-multiword semantic equivalence transformations…

…Gatfol currently does this…